Gary Marcus has criticised a prediction by Elon Musk that AGI (Artificial General Intelligence) will be achieved by 2029 and challenged him to a $100,000 bet.

Marcus founded Robust.AI and Geometric Intelligence (acquired by Uber), is Professor Emeritus of Psychology and Neural Science at NYU, and co-authored Rebooting AI. His views on AGI are worth listening to.

AGI is the kind of artificial intelligence depicted in films such as 2001: A Space Odyssey (‘HAL’) and Iron Man (‘J.A.R.V.I.S.’). Unlike current AIs, which are trained for a specific task, an AGI would work more like the human brain and could learn to perform a wide range of tasks.

Most experts believe AGI will take decades to achieve, and some think it will never be possible. In a survey of leading experts in the field, the average estimate was a 50 percent chance that AGI would be developed by 2099.

Elon Musk is far more optimistic:

> 2029 feels like a pivotal year. I’d be surprised if we don’t have AGI by then. Hopefully, people on Mars too.
>
> Elon Musk (@elonmusk), May 30, 2022

Musk’s tweet received a response from Marcus in which he challenged the SpaceX and Tesla founder to a $100,000 bet that he’s wrong about the timing of AGI.

AI expert Melanie Mitchell of the Santa Fe Institute suggested that the bet be placed on longbets.org. Marcus says he is up for making the bet on the platform, where the loser donates the money to a philanthropic effort, but he has yet to receive a response from Musk.

In a post on his Substack, Marcus explained why he’s calling Musk out on his prediction.

“Your track record on betting on precise timelines for things is, well, spotty,” wrote Marcus. “You said, for instance in 2015, that (truly) self-driving cars were two years away; you’ve pretty much said the same thing every year since. It still hasn’t happened.”

Marcus argues that pronouncements of the kind Musk is famous for can be dangerous and can draw attention away from the questions that need answering first.

“People are very excited about the big data and what it’s giving them right now, but I’m not sure it’s taking us closer to the deeper questions in artificial intelligence, like how we understand language or how we reason about the world,” Marcus said in a 2016 interview with Edge.org.

Marcus points to an incident in April, in which a Tesla on Autopilot crashed into a $3 million private jet at a mostly empty airport, as an example of why the focus needs to be on solving serious issues with AI systems before rushing towards AGI:

“It’s easy to convince yourself that AI problems are much easier than they actually are, because of the long tail problem,” argues Marcus.

“For everyday stuff, we get tons and tons of data that current techniques readily handle, leading to a misleading impression; for rare events, we get very little data, and current techniques struggle there.”

Marcus says he can guarantee that Musk won’t be shipping fully autonomous ‘Level 5’ cars this year or next, despite what Musk suggested at TED2022. Unexpected outlier circumstances, such as a private jet appearing in a car’s path, will continue to pose a problem for AI for the foreseeable future.

“Seven years is a long time, but the field is going to need to invest in other ideas if we are going to get to AGI before the end of the decade,” explains Marcus. “Or else outliers alone might be enough to keep us from getting there.”

Marcus believes outliers aren’t an unsolvable problem, but there is currently no known solution. In his view, predicting that AGI will be achieved by the end of the decade, before that issue is anywhere near solved, is premature.

Along the same lines, Marcus points out that deep learning is “pretty decent” at recognising objects but nowhere near as adept at human brain-like activities such as planning, reading, or language comprehension.

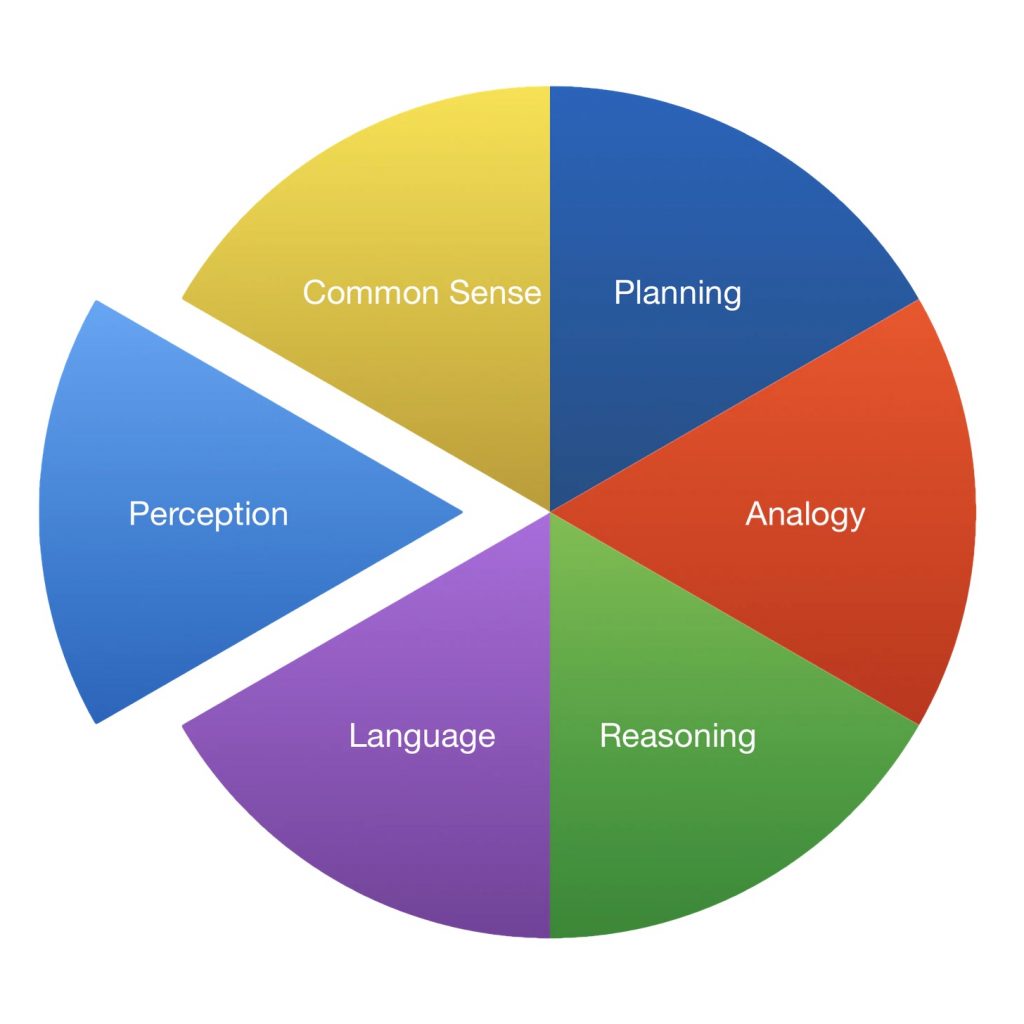

Here’s a pie chart used by Marcus of the kind of things that an AGI would need to achieve:

Marcus points out that he has been using the chart for around five years and the situation has barely changed: we “still don’t have anything like stable or trustworthy solutions for common sense, reasoning, language, or analogy.”

Tesla is currently building a robot that it claims will be able to perform mundane tasks around the home. Marcus is sceptical, given the problems Tesla is having with its cars on the roads.

“The AGI that you would need for a general-purpose domestic robot (where every home is different, and each poses its own safety risks) is way beyond what you would need for a car that drives on roads that are more or less engineered the same way from one town to the next,” he reasons.

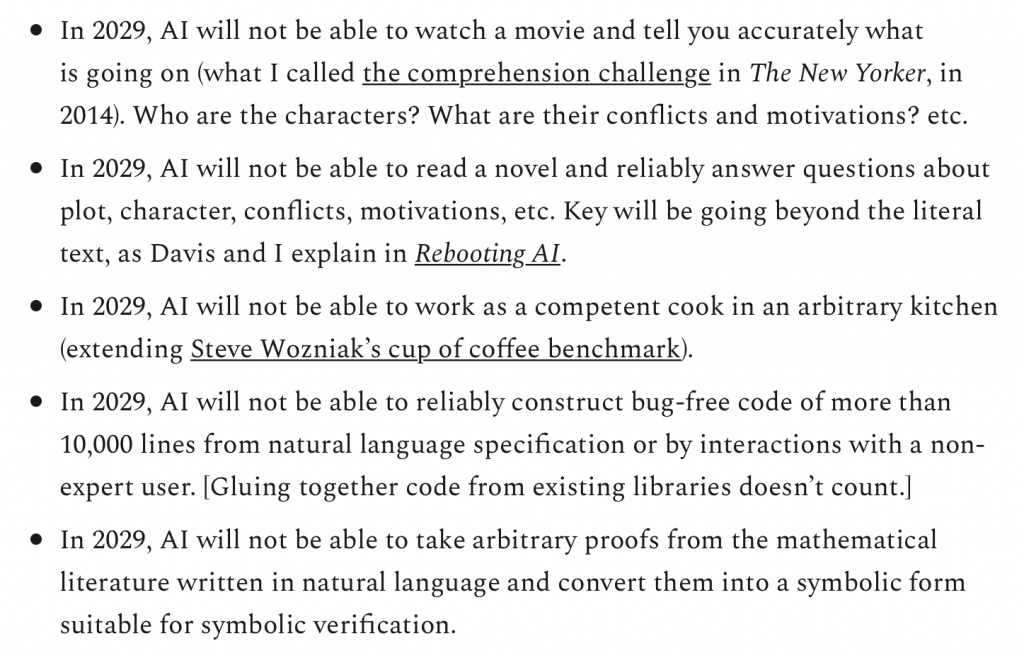

Because AGI is still a somewhat vague term that’s open to interpretation, Marcus makes his own five predictions that AI will not be able to do by Musk’s 2029 prediction that AGI will be achieved:

Well then, Musk, do you accept Marcus’ challenge? I can’t say I would, even if I had anywhere near Musk’s disposable income.

(Photo by Kenny Eliason on Unsplash)
