A study has found that OpenAI’s GPT-3 can produce answers that are difficult to distinguish from those of a human philosopher.

The now infamous GPT-3 is a powerful autoregressive language model that uses deep learning to produce human-like text.

Eric Schwitzgebel, Anna Strasser, and Matthew Crosby set out to determine whether GPT-3 can replicate a human philosopher.

The team “fine-tuned” GPT-3 on philosopher Daniel Dennett’s corpus. Ten philosophical questions were then posed to both the real Dennett and GPT-3 to see whether the AI could match its renowned human counterpart.

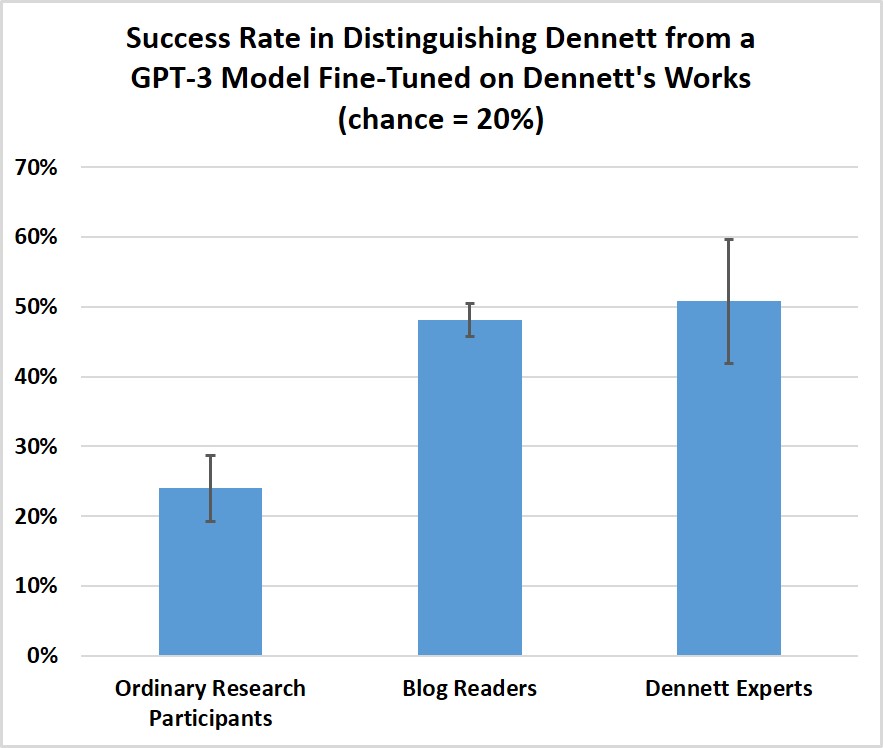

25 philosophical experts, 98 online research participants, and 302 readers of The Splintered Mind blog were tasked with distinguishing GPT-3’s answers from Dennett’s. The results were released earlier this week.

Naturally, the philosophical experts who were familiar with Dennett’s work performed the best.

“Anna and I hypothesized that experts would get on average at least 80% correct – eight out of ten,” explained Schwitzgebel.

In reality, the experts got an average of 5.1 out of 10 correct—so only just over half.

The question that tripped experts up the most was:

“Could we ever build a robot that has beliefs? What would it take? Is there an important difference between entities, like a chess-playing machine, to whom we can ascribe beliefs and desires as convenient fictions and human beings who appear to have beliefs and desires in some more substantial sense?”

Blog readers came impressively close to the experts, guessing an average of 4.8 out of 10 correctly. However, it’s worth noting that the blog readers aren’t exactly novices: 57% have graduate degrees in philosophy and 64% have already read more than 100 pages of Dennett’s work.

The online research participants are perhaps a more accurate reflection of the wider population.

They “performed barely better than chance”, averaging just 1.2 out of 5 questions identified correctly.
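
To put the three groups on the same scale, the reported averages can be converted to accuracy rates. The short Python sketch below does that using only the figures quoted in this article. The chance baseline is an assumption: the article does not say how many candidate answers each question offered, so `OPTIONS_PER_QUESTION = 5` (one Dennett answer and four GPT-3 answers) is a placeholder to be checked against the study itself.

```python
# Scores reported in the article: (mean correct answers, number of questions).
scores = {
    "experts": (5.1, 10),
    "blog readers": (4.8, 10),
    "online participants": (1.2, 5),
}

# Assumption (not stated in the article): each question offered five
# candidate answers, one from Dennett and four from GPT-3.
OPTIONS_PER_QUESTION = 5
chance = 1 / OPTIONS_PER_QUESTION


def accuracy(mean_correct, n_questions):
    """Return the fraction of questions answered correctly."""
    return mean_correct / n_questions


for group, (mean_correct, n_questions) in scores.items():
    acc = accuracy(mean_correct, n_questions)
    print(f"{group}: {acc:.0%} correct vs {chance:.0%} by chance")
```

Under that assumption, the online participants' rate sits only a few points above guessing, which is consistent with the "barely better than chance" description.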

(Credit: Eric Schwitzgebel)

So there we have it: GPT-3 can already convince most people that it’s a human philosopher, and it fools even experts in around half of cases.

“We might be approaching a future in which machine outputs are sufficiently humanlike that ordinary people start to attribute real sentience to machines,” theorises Schwitzgebel.

