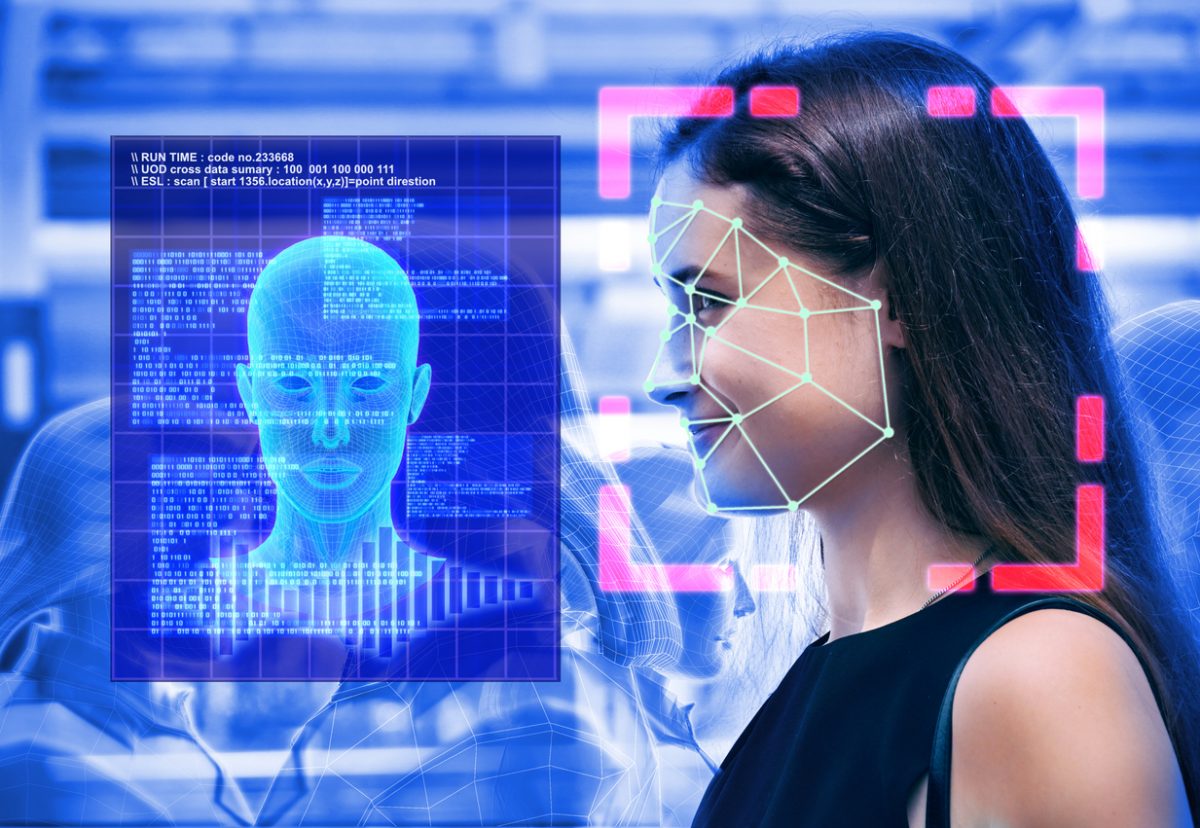

Researchers have combined speech and facial recognition data to improve the emotion detection abilities of AIs.

The ability to recognise emotions is a longstanding goal of AI researchers. Accurate recognition enables things such as detecting tiredness at the wheel, anger which could lead to a crime being committed, or perhaps even signs of sadness/depression at suicide hotspots.

Nuances in how people speak and move their facial muscles to express moods have presented a challenge. In a paper on arXiv, researchers at the University of Science and Technology of China in Hefei report some progress.

In the paper, the researchers wrote:

“Automatic emotion recognition (AER) is a challenging task due to the abstract concept and multiple expressions of emotion.

Inspired by this cognitive process in human beings, it’s natural to simultaneously utilize audio and visual information in AER … The whole pipeline can be completed in a neural network.”

Breaking down the process as much as I can, the system is made of two parts: one for video, and one for audio.

For the video system, frames of faces pass through two further computational stages: a basic face detection algorithm, and three facial recognition networks that are optimised to be ‘emotion-relevant’.

As for the audio system, the sound-processing algorithms take speech spectrograms as input, which helps the AI model focus on the areas most relevant to emotion.
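To make the idea of a speech spectrogram concrete, here is a minimal sketch of a short-time Fourier transform in plain Python. The frame length, hop size, Hann window, and the `spectrogram` function name are all assumptions for illustration; the article does not say how the researchers computed their spectrograms.

```python
import cmath
import math


def spectrogram(signal, frame_len=64, hop=32):
    """Return one list of frequency-bin magnitudes per overlapping frame.

    frame_len and hop are illustrative defaults, not values from the paper.
    """
    # A Hann window tapers each frame to reduce leakage between bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_len)]
        magnitudes = []
        # Naive DFT over the non-negative frequency bins (slow but dependency-free).
        for k in range(frame_len // 2 + 1):
            coeff = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n in range(frame_len))
            magnitudes.append(abs(coeff))
        frames.append(magnitudes)
    return frames


# Toy input standing in for speech: a 128 Hz tone sampled at 1024 Hz.
sample_rate = 1024
tone = [math.sin(2 * math.pi * 128 * t / sample_rate) for t in range(256)]
spec = spectrogram(tone)

# The strongest bin in the first frame shows where the energy sits.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
```

Each row of `spec` is one time slice and each column one frequency band, so the result can be treated like an image. That is what lets a network attend to particular time-frequency regions.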

Measurable characteristics, known as features, are extracted from the video system's facial recognition networks and matched with speech features from the audio counterpart. This captures the associations between them for a final emotion prediction.
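One simple way to picture combining the two streams is to concatenate a video feature vector with an audio feature vector and score the seven emotion labels from the joined vector. The sketch below does exactly that with random, untrained weights. It is a stand-in and not the researchers' fusion method, which the article does not detail; `fuse_and_predict`, the feature sizes, and the weights are all hypothetical.

```python
import math
import random

EMOTIONS = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_predict(video_feat, audio_feat, weights, bias):
    """Concatenate both feature vectors and apply one linear layer."""
    fused = video_feat + audio_feat  # list concatenation, not addition
    scores = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, bias)]
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs


# Hypothetical features and untrained weights, seeded for repeatability.
rng = random.Random(0)
video_feat = [rng.gauss(0, 1) for _ in range(8)]
audio_feat = [rng.gauss(0, 1) for _ in range(4)]
weights = [[rng.gauss(0, 0.1) for _ in range(12)] for _ in EMOTIONS]
bias = [0.0] * len(EMOTIONS)

label, probs = fuse_and_predict(video_feat, audio_feat, weights, bias)
```

With random weights the predicted label is meaningless; the point is only the shape of the computation, in which both modalities contribute to a single probability distribution over the seven emotions.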

A database known as AFEW8.0 contains clips from films and television shows and was used for a subchallenge of EmotiW2018. The AI was fed 653 video clips and their corresponding audio from the database.

In the challenge, the researchers' AI performed admirably: it correctly determined the emotions ‘angry,’ ‘disgust,’ ‘fear,’ ‘happy,’ ‘neutral,’ ‘sad,’ and ‘surprise’ 62.48 percent of the time.
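For a sense of scale, the quick calculation below converts that percentage into a clip count and compares it with random guessing. It assumes the 62.48 percent figure was measured over all 653 clips, which the article implies but does not state outright; the variable names are illustrative.

```python
TOTAL_CLIPS = 653           # clips fed to the AI, per the article
REPORTED_ACCURACY = 0.6248  # 62.48 percent, per the article
NUM_EMOTIONS = 7            # angry, disgust, fear, happy, neutral, sad, surprise

# Assumption: accuracy was computed over all 653 clips.
correct_clips = round(TOTAL_CLIPS * REPORTED_ACCURACY)
recomputed_accuracy = correct_clips / TOTAL_CLIPS

# Accuracy expected from picking one of the seven labels uniformly at random.
chance_accuracy = 1 / NUM_EMOTIONS

print(f"correct clips: {correct_clips} of {TOTAL_CLIPS}")
print(f"recomputed accuracy: {recomputed_accuracy:.2%}")
print(f"uniform-guess baseline: {chance_accuracy:.2%}")
```

Under that assumption, the reported figure corresponds to 408 correctly labelled clips, against a uniform-guess baseline of roughly 14 percent.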

Overall, the AI performed better on emotions like ‘angry,’ ‘happy,’ and ‘neutral,’ which have obvious characteristics. It struggled more with those that are more nuanced, like ‘disgust’ and ‘surprise.’
