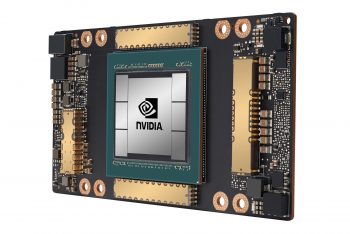

US introduces new AI chip export restrictions

NVIDIA has revealed that it’s subject to new US rules restricting the export of AI chips to China and Russia.

In an SEC filing, NVIDIA says the US government has informed the chipmaker of a new license requirement that impacts two of its GPUs designed to speed up machine learning tasks: the current A100, and the upcoming H100.

“The license requirement also includes any future NVIDIA integrated circuit achieving both peak performance and chip-to-chip I/O performance equal...