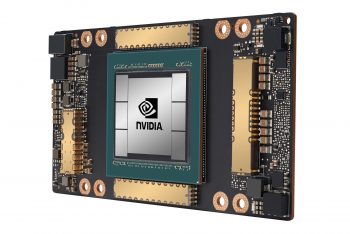

MLPerf Inference v3.1 introduces new LLM and recommendation benchmarks

The latest release of MLPerf Inference adds new LLM and recommendation benchmarks, extending the suite's coverage of AI workloads.

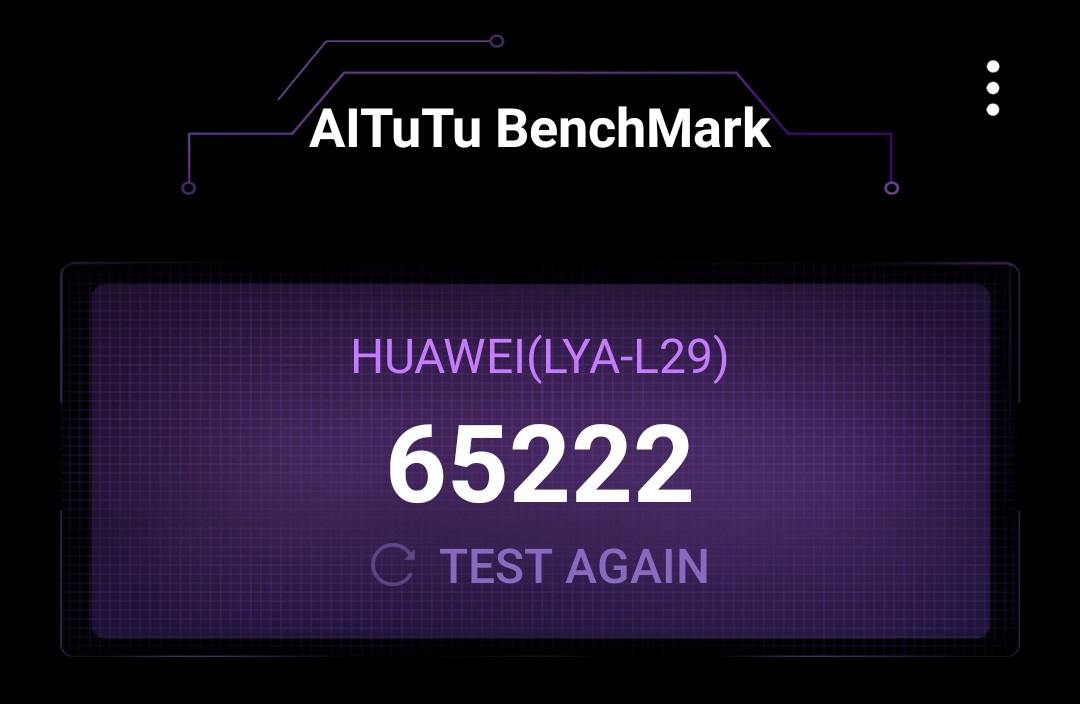

The v3.1 release of the benchmark suite drew record participation, with more than 13,500 performance results and performance gains of up to 40 percent.

The round also stands out for its diverse pool of 26 submitters and more than 2,000 power results, demonstrating the broad spectrum of...